I’ve never been a fan of the “big data” moniker. Originally intended to convey the hopelessness of assimilating massive data sets, it has devolved into a product category instead of invoking a “thar be demons” fight-or-flight response in IT pros.

And big data really should scare you, especially when it comes to operations logging. When I think I about how much data that can create, I end up in awe of the scale of logging in public cloud systems. Consider that AWS and Azure have a fundamental need to log everything — not least so they can charge you for everything. Then scale that micro level of data logging to the millions and millions of resources in the hundreds of thousands of AWS accounts and Azure subscriptions and you end up with the kind of galactic sizing that humans have a hard time grokking. To spice up the sauce, add in the typical enterprise’s own log generation. In five words, it’s a lot of data. 🙂

Finding any actionable security information in these data sets is a cosmic-level search for a needle in a haystack. Like I said, big data oughta scare you.

Step one has been, so far, to ingest it all in a consolidated store for analysis. Splunk comes to mind here and is the choice for many enterprises; there are numerous others. In fact, every one of my clients has a product in this class and they all say the same two things about it. One point they are happy to acknowledge; the other they’d rather not.

They’re all happy to tell you it costs a fortune to ingest the cosmos. They’re not so happy to say that they don’t get as much value as they’d hoped despite paying for a planet’s worth of log data. It’s a universe-sized case of data indigestion.

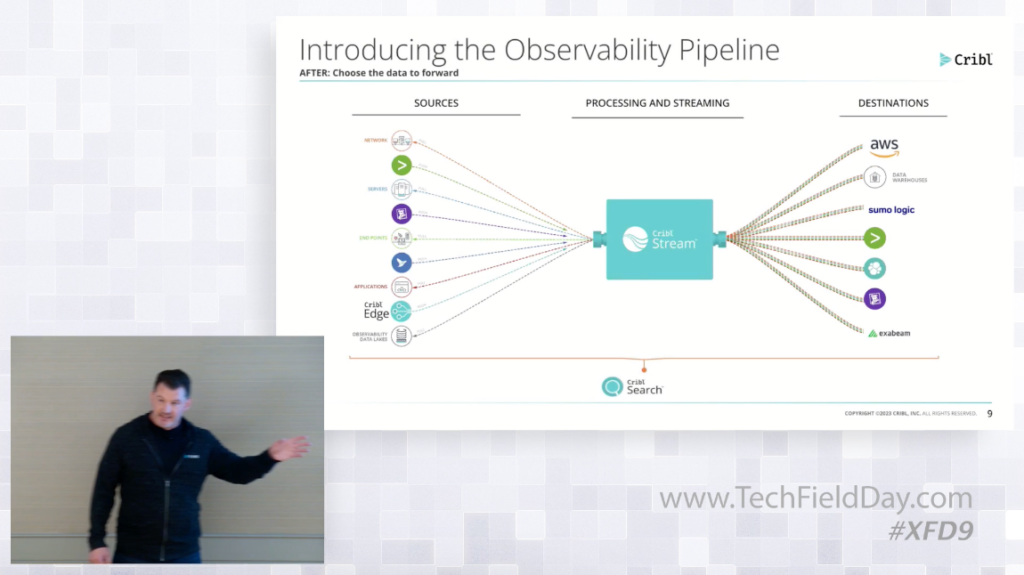

Today, at Security Field Day #XFD9, Cribl showed two products that attempt to order the big data log cosmos. Stream is the more interesting and mature of the two. (Be sure to watch the video presentation for details on the second, newer product Search.)

As the capture below from the presentation shows, Stream not only accepts all incoming log fire hoses, it can ingest that data to any destination.

Much more interesting is Stream’s ability to intelligently eliminate data that is, most probably, just noise. The example we were shown was a rule that drops DNS queries, which can be large, from the ingested stream.

Cribl’s comments suggested this benefit is primarily cost-savings. Clearly, that’s a good thing. Galactic data space is expensive, especially when you are likely to keep the data for a long time — maybe forever if you are in a regulated industry.

But I think there’s a more important benefit. If you know in advance that you don’t care about certain DNS log entries — they really don’t help you understand anything useful about the security environment at any given time — by dropping them from the store you make the finding-a-needle-in-a-galaxy problem a bit smaller.

It puts me in mind of the difference between a lossless audio stream and an MP3. Perceptual coding used in compression algorithms dictates that if the oboe is too soft to hear over the trumpet in the orchestra at that moment in the score, you can drop the data (sound) produced by the oboe and the listener won’t notice. By contrast, the lossless stream will contain the oboe’s sound data even though you can’t hear it.

Likewise in Stream, if you know the enterprise (the orchestra) doesn’t need to “hear” the oboe (specific DNS lookups), you not only won’t notice its absence, your analysis will actually be “higher fidelity” because the elimination of oboe sound data focuses attention on the trumpet.

So, using products like Stream not only has a cost benefit — it also helps surface the things you need to know in the data you do keep. That can help you grok the big data cosmos.

Leave a Reply