Imagine being able to accomplish many Azure administrative tasks in an object-aware, fully-integrated scripting language without having to deploy a server to run scripts on and only paying for what you use. That’s the promise of Azure Functions written in PowerShell.

The sample function described here scans a list of subscriptions retrieved from blobs in a storage account, looking in each subscription for key vaults. If it is properly authorized via a managed service identity, the function uses the Azure PowerShell cmdlet Backup-AzKeyVaultSecret to make a secure copy of the secrets in each key vault in a subscription.

Backup-AzKeyVaultSecret is very interesting. It’s no surprise that it encrypts the backed-up secret. But you might be surprised to learn that a backup can only be restored within the same subscription and Azure geography from which it was taken. Restore-AzKeyVaultSecret will fail if you attempt to restore a secret created by Backup-AzKeyVaultSecret to a different Azure subscription or geography. This restore restriction means we can create “subscription/geography paired” backups of key vault secrets. It’s elegant to use a subscription within a specific geography as a security boundary for key vault secret backups: it limits the scope of potential loss.
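Because the restriction is enforced at restore time, it helps to see what a restore looks like. The sketch below downloads one backup blob written by run.ps1 (further down) and hands it to Restore-AzKeyVaultSecret. It assumes you are already signed in, that Set-AzContext points at the subscription the backup came from, and that you have a storage context in hand. The function name Restore-SecretFromBlob is hypothetical, not part of Az. As far as I know, the restore is also rejected if the target vault already holds a secret with that name, so this is mainly useful after a deletion or for a rebuilt vault.

```powershell
# Hypothetical helper (not part of Az): restore one secret from a backup blob written by run.ps1.
# Assumes you're signed in (Connect-AzAccount) and Set-AzContext points at the subscription
# the backup came from; the target vault must be in that subscription and Azure geography.
function Restore-SecretFromBlob {
    param(
        [Parameter(Mandatory)] [string] $ContainerName,  # dated backup container created by run.ps1
        [Parameter(Mandatory)] [string] $BlobName,       # run.ps1 names each blob after its secret
        [Parameter(Mandatory)] [string] $VaultName,      # key vault to restore into
        [Parameter(Mandatory)] $Context                  # storage context, e.g. (Get-AzStorageAccount ...).Context
    )
    $tempFile = New-TemporaryFile
    try {
        # Download the encrypted backup blob over the empty temp file
        $download = @{
            Container   = $ContainerName
            Blob        = $BlobName
            Destination = $tempFile.FullName
            Context     = $Context
            Force       = $true
        }
        Get-AzStorageBlobContent @download | Out-Null
        # Hand the file to Key Vault; a restore into another subscription or geography is rejected here
        Restore-AzKeyVaultSecret -VaultName $VaultName -InputFile $tempFile.FullName
    }
    finally {
        # Remove the local copy of the backup whether or not the restore succeeded
        Remove-Item -Path $tempFile.FullName -Force -ErrorAction SilentlyContinue
    }
}
```

Usage: `Restore-SecretFromBlob -ContainerName <backup-container> -BlobName <secret-name> -VaultName <vault> -Context $c`, where `$c` is the same storage context run.ps1 builds.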

I have to admit that when I started developing this function for a client, I thought it would be easy. After all, the PowerShell script and its logic are quite simple. What I didn’t know was that the state of the tooling, particularly the Visual Studio Code Azure Functions extension, would be such a hot mess. Worse, Microsoft uses the apparently endless preview status of the VS Code extension as an excuse to deliver lousy documentation and unreliable functionality, while tolerating different product groups pointing fingers at each other. See the response I got to reporting the inability to locally debug a PowerShell Azure Function. (Update 2021-02-14: it’s actually much worse than what I documented in that issue. See this new problem I reported on GitHub.) While Azure Functions support for PowerShell scripts is generally available, the development tools in VS Code are largely still in preview. Hopefully, in the near future Microsoft, known for its developer tooling, will fix the toolset. But currently, the only fair characterization is that it’s a disaster.

But don’t let that stop you! Believe me, learning to create PowerShell Azure Functions will be worth the journey. And that’s the real purpose of this blog post: to help you get started. While I can’t detail all the individual steps to create your first function — there are just too many — I can point out some details you will need to know. And I hope that providing a very simple sample you can modify yourself will help get you started.

First, about that “serverless” computing. Guess what? Your function runs on a server — in the case of Azure Functions written in PowerShell, it’s a real live Windows server running in Azure’s App Service. The difference from traditional deployment is that the server environment always presents the same interface to an app or function. That allows the server to “disappear” with respect to a function. You just write the PowerShell function using Azure Functions services like triggers and bindings — which are always the same — and it’ll execute in App Service without you having to manage a server.

Let’s start with what you need to develop Azure Functions in PowerShell.

- A development subscription. Once you get the hang of how it all works together, you may want to switch to the destination subscription early, because moving later means recreating the managed identity and all of its associated permissions.

- Visual Studio Code and the following extensions:

  - PowerShell

  - Azure Functions

- Azure Storage Explorer for viewing the results of the backup and for uploading the lists of subscriptions containing key vaults to be backed up

- Azure Functions Core Tools. This is supposed to install automatically the first time you try to run a function locally. But beware: on macOS, when you try to run it, it issues all kinds of error messages that boil down to needing a different version of .NET Core than the one it installs automatically. (Like I said, the tooling is a disaster.)

Once you have all these components in working order, download the sample code below and give it a try. Here are some notes on how to set it up.

- Start by creating an empty function in VS Code using the Azure Functions extension. This will set up the folder structure you need to deploy the Azure Function. After deployment, you can paste the sample code below into the appropriate files in the empty function and customize them to your needs.

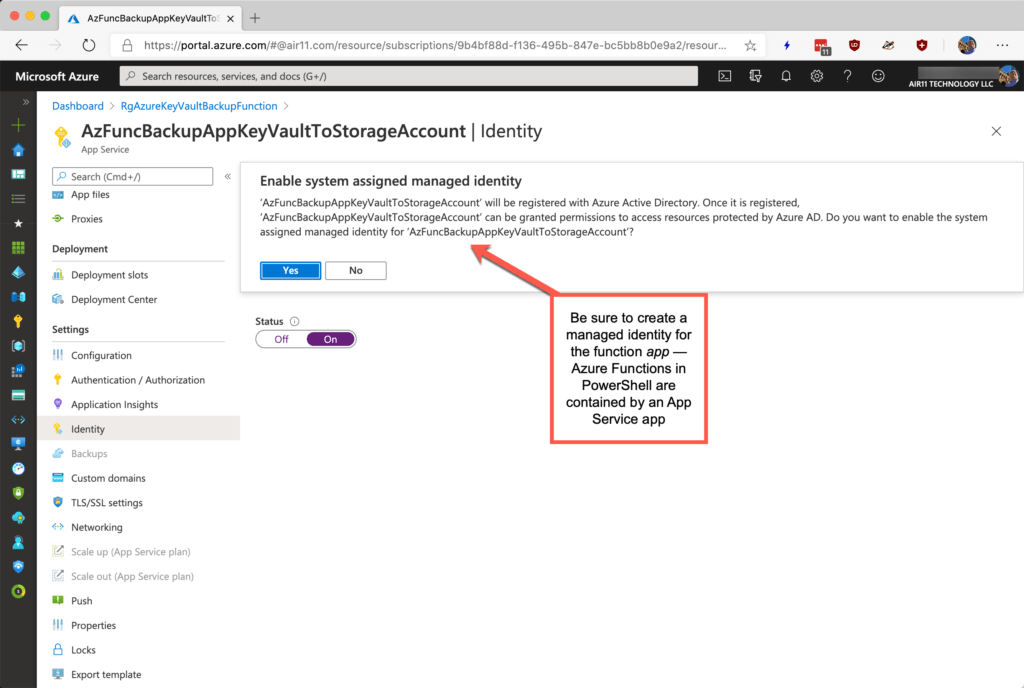

- Deploy the empty function to Azure. This will create the App Service app. In turn, that will allow you to create the managed identity you need.

- I don’t like proliferating storage accounts, so the script stores the backed-up secrets in the same storage account that Azure Functions uses for its own purposes. You will notice that the PowerShell code skips the containers Azure Functions creates (their names start with azure-webjobs).

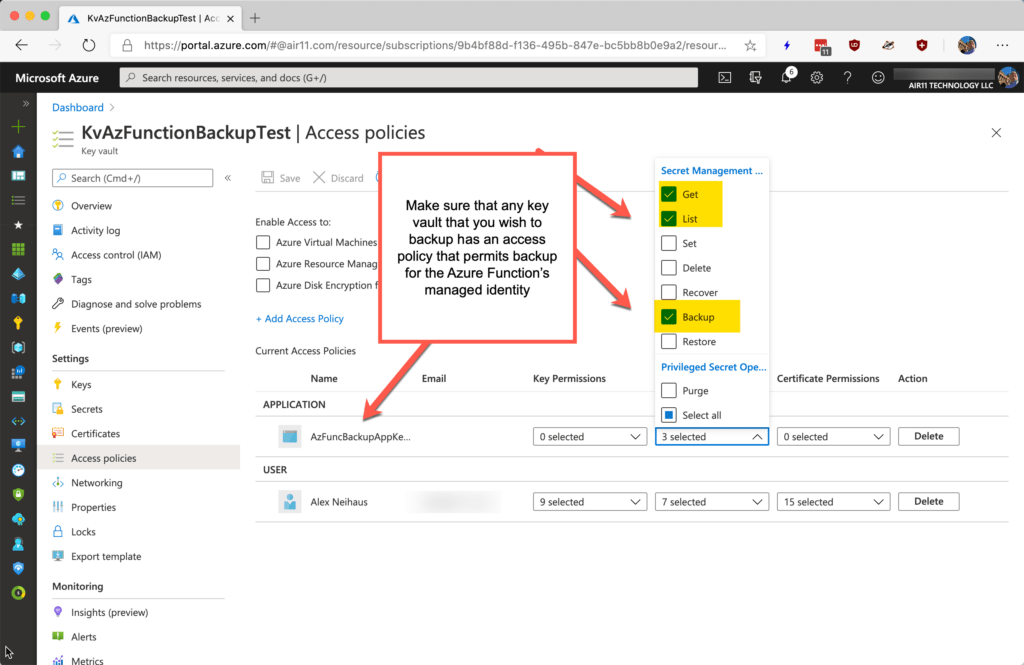

- Set up a test key vault and add some secrets. To test your permissions, set up a second key vault but do not add the function app’s managed service identity to that second vault’s access policies. When you get the PowerShell function running, this will be a good test of the permissions you have established. The Azure Function script should skip a key vault in a subscription it otherwise has access to but which has no key vault access policies granting backup access to the function.

I’ve thought hard about how to document this Azure Function. There’s only so much one can cram into a blog post, and this one is already quite long. So, rather than add much more text, here is a collection of screenshots that I hope will highlight some things to consider. Because this Azure Function is so simple, you could just run it in a standard PowerShell console (assuming you set up access correctly), modify it to your needs and only then try it as an Azure Function. That was really the idea here: use Azure Functions as a scheduling mechanism for a very simple script. (And, yes, of course Azure Automation could do this, too.)

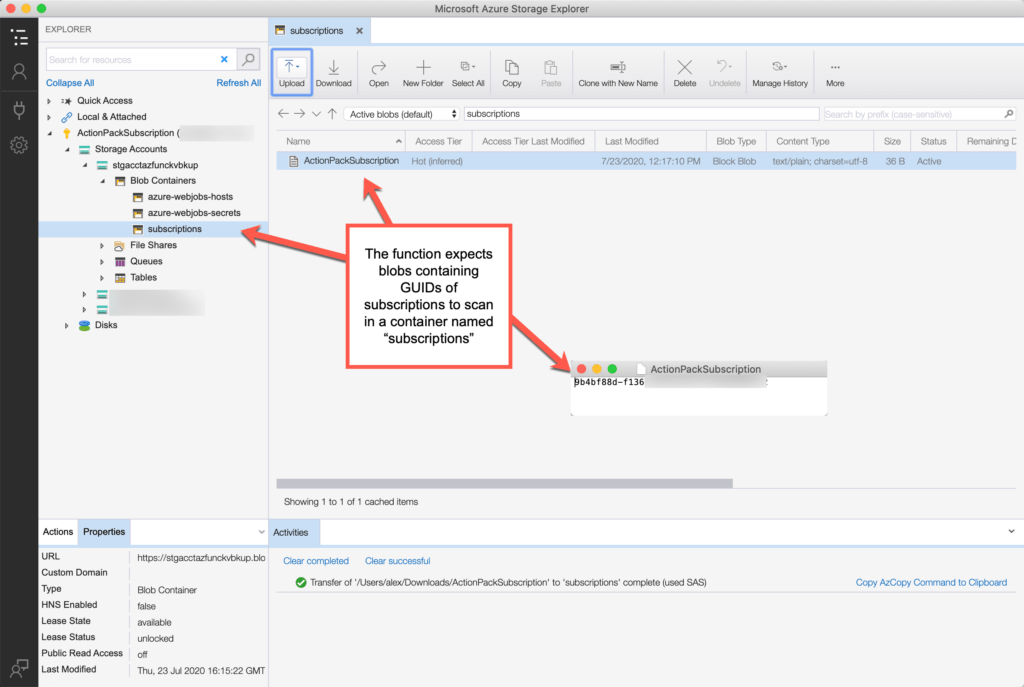

First, let’s take a look at the storage account that was created when we deployed the empty function from VS Code. I’ve added a container named subscriptions, which the PowerShell function requires in order to know which subscriptions in a tenant to scan for key vaults. Each blob in that container holds one subscription GUID as its content, and the blob’s name serves as a friendly label for the subscription.

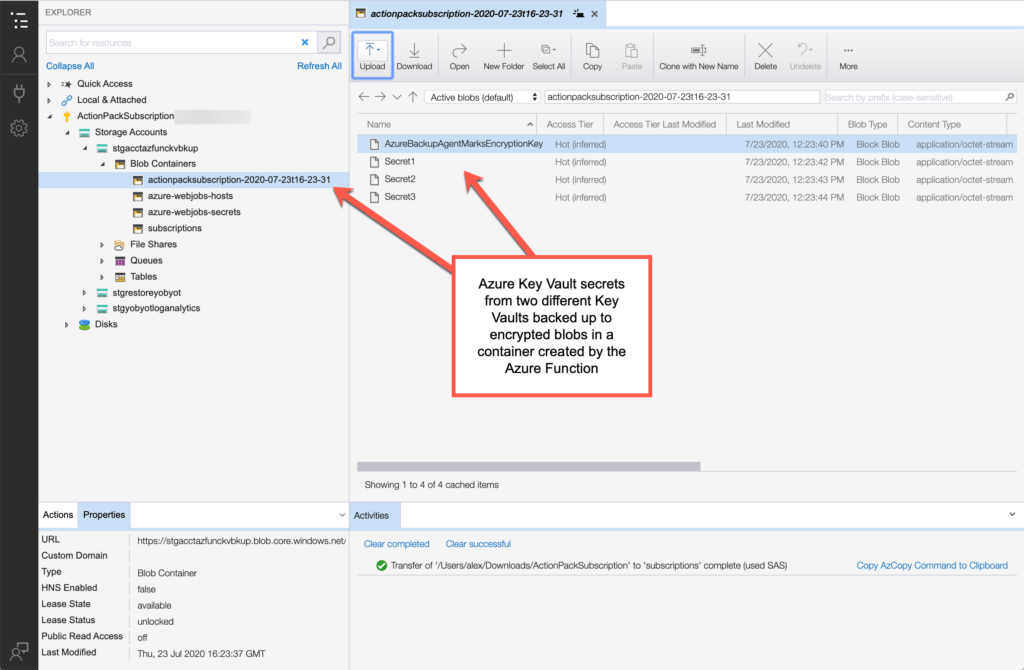
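run.ps1 reads each subscriptions blob with `$blob.ICloudBlob.DownloadText()` and passes the text straight to Set-AzContext. A file saved from an editor often ends with a newline, so it is worth trimming and validating that text first. Here is a minimal sketch; ConvertTo-SubscriptionId is a hypothetical helper, not an Az cmdlet.

```powershell
# Hypothetical helper: turn the text of a "subscriptions" blob into a subscription ID.
function ConvertTo-SubscriptionId {
    param([Parameter(Mandatory)] [AllowEmptyString()] [string] $BlobText)
    # Drop trailing newlines/spaces that editors add to uploaded text files
    $candidate = $BlobText.Trim()
    $parsed = [guid]::Empty
    if (-not [guid]::TryParse($candidate, [ref] $parsed)) {
        throw "Blob content '$candidate' is not a subscription GUID"
    }
    # Canonical lowercase 8-4-4-4-12 form
    return $parsed.ToString()
}
```

To add a subscription to the list, upload a text file whose only content is the GUID, for example with `Set-AzStorageBlobContent -File .\contoso-prod.txt -Container subscriptions -Blob contoso-prod -Context $c` (the file and blob names here are placeholders).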

Here’s an image showing the same account after the first execution of the Azure Functions PowerShell script. Because storage container names are restricted in length (63 characters at most) and in the characters they may contain (lowercase letters, digits and hyphens; no colons), the script builds a compliant but still sortable container name from the subscription label and the UTC time of the run. You can also see the secrets that were backed up. Note that the container holds the secrets from all key vaults in the subscription to which the function’s identity was granted access. So you’ve “lost” the name of the key vault from which each backup was made, which is a good thing, IMHO, if you need to recreate the key vault in a different region.
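The container-name munging can be pulled out into a small function that is easy to test on its own. This is a sketch under the container naming rules as I understand them (3 to 63 characters; lowercase letters, digits and single hyphens; starting and ending with a letter or digit). ConvertTo-BackupContainerName is a hypothetical name, and mapping every disallowed character to a hyphen is my choice rather than what run.ps1 originally did.

```powershell
# Hypothetical helper: build a container name like "contoso-prod-2021-02-14t05-00-00".
function ConvertTo-BackupContainerName {
    param(
        [Parameter(Mandatory)] [string] $SubscriptionLabel,  # e.g. the blob name from "subscriptions"
        [Parameter(Mandatory)] [datetime] $Timestamp         # UTC time of this backup run
    )
    # Sortable ISO 8601 stamp (always 19 chars), lowercased, with colons replaced
    $stamp = $Timestamp.ToString('s', [cultureinfo]::InvariantCulture).ToLowerInvariant().Replace(':', '-')
    # Lowercase the label, map disallowed characters to '-', collapse repeats, trim edge hyphens
    $label = ($SubscriptionLabel.ToLowerInvariant() -creplace '[^a-z0-9-]', '-' -creplace '-{2,}', '-').Trim('-')
    # 63 max - 19 (stamp) - 1 (separator) leaves 43 characters for the label
    if ($label.Length -gt 43) { $label = $label.Substring(0, 43).TrimEnd('-') }
    if ($label.Length -eq 0) { return $stamp }
    return "$label-$stamp"
}
```

Because the timestamp sits at the end in a fixed-width, year-first format, containers for the same subscription still sort chronologically by name.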

I have suggested that you create an empty function using the VS Code Azure Functions extension and upload it to Azure. That’s so you can create a managed identity and assign it permissions in a key vault’s access policy. Note that the function app’s managed identity also needs at least the RBAC Reader role at the subscription level, so that it can enumerate the key vaults. The subscription-level Reader assignment isn’t shown here because you already know how to do this, right? 🙂

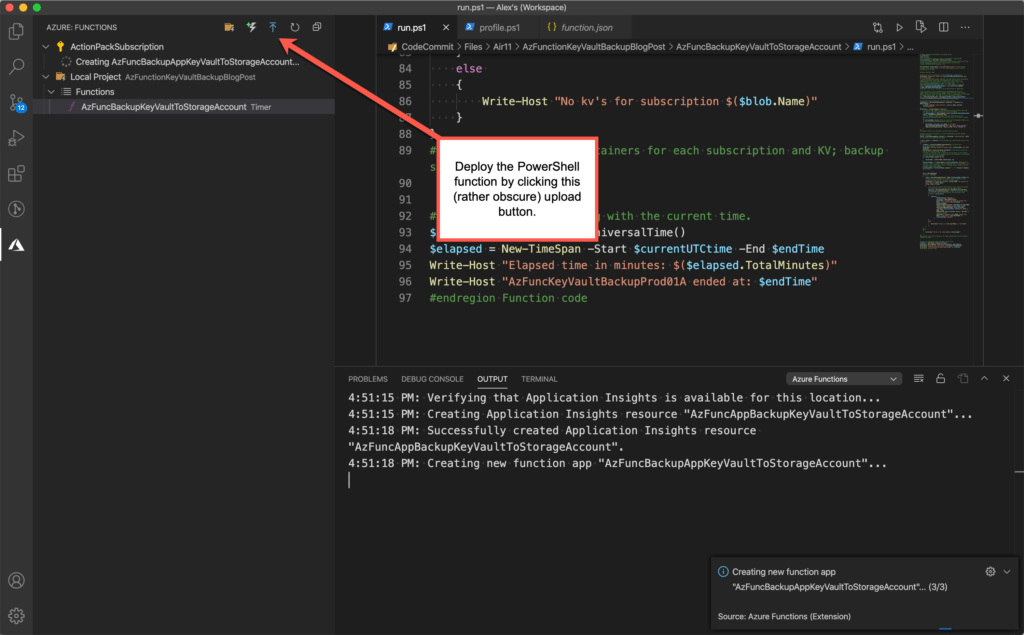
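If you would rather script both grants than click through the portal, here is a hedged sketch. It builds parameter sets for New-AzRoleAssignment (Reader at subscription scope) and Set-AzKeyVaultAccessPolicy (secret list and backup, which appear to be the only secret permissions run.ps1 exercises). The helper name and the permission choice are my assumptions, and an access policy only applies to vaults using the access-policy permission model rather than Azure RBAC.

```powershell
# Hypothetical helper: parameter sets for the two grants the function app's identity needs.
function New-KvBackupGrantParams {
    param(
        [Parameter(Mandatory)] [string] $PrincipalId,     # object ID of the function app's managed identity
        [Parameter(Mandatory)] [string] $SubscriptionId,  # subscription whose key vaults will be scanned
        [Parameter(Mandatory)] [string] $VaultName        # a key vault the function should back up
    )
    [pscustomobject]@{
        # Reader at subscription scope lets Get-AzKeyVault enumerate the vaults
        RoleAssignment = @{
            ObjectId           = $PrincipalId
            RoleDefinitionName = 'Reader'
            Scope              = "/subscriptions/$SubscriptionId"
        }
        # List secrets (Get-AzKeyVaultSecret) and back them up (Backup-AzKeyVaultSecret)
        AccessPolicy = @{
            VaultName            = $VaultName
            ObjectId             = $PrincipalId
            PermissionsToSecrets = @('list', 'backup')
        }
    }
}

# Usage (assumes a system-assigned identity; names are placeholders):
# $principalId = (Get-AzWebApp -ResourceGroupName RgAzureKeyVaultBackupFunction -Name yourfunctionapp).Identity.PrincipalId
# $grants = New-KvBackupGrantParams -PrincipalId $principalId -SubscriptionId '<subscription-guid>' -VaultName 'yourtestvault'
# $rbac = $grants.RoleAssignment; New-AzRoleAssignment @rbac
# $policy = $grants.AccessPolicy; Set-AzKeyVaultAccessPolicy @policy
```

Leaving the second test vault out of this call is exactly the negative test described earlier: the function should log that vault and move on.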

When it comes to actually deploying a PowerShell Azure Function to Azure, it should be as simple as clicking the upload (deploy) button in the Azure Functions extension, as shown below. Note that if you want the local version of your function to show up in VS Code, so you can run it locally and “debug” it (ha!), you must add it to a VS Code workspace.

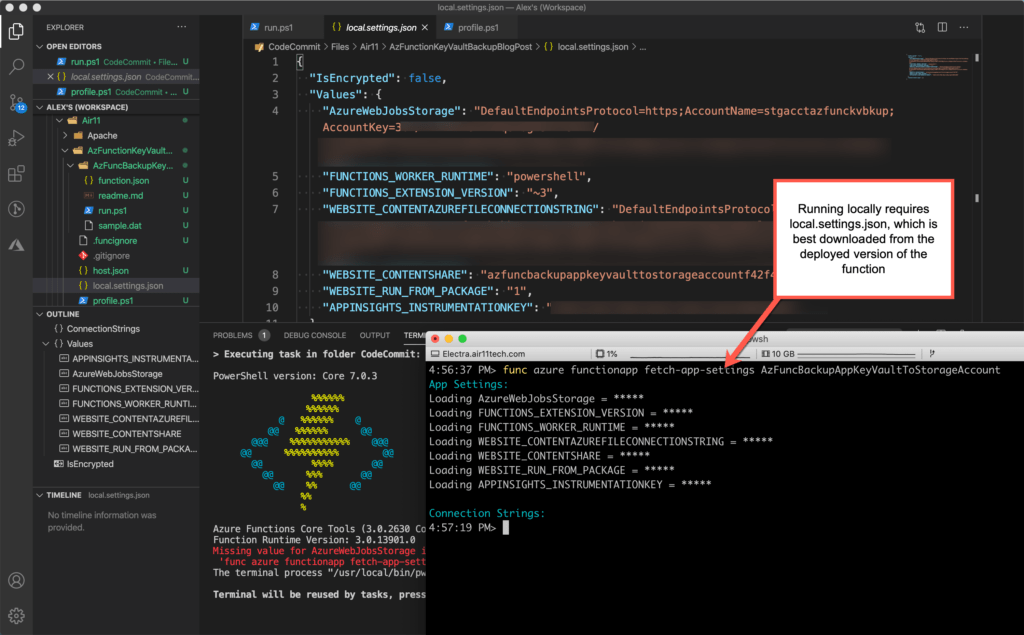

When you run a PowerShell function locally using Core Tools, you may get errors from both VS Code and Core Tools. I spent hours trying to decipher the errors VS Code presents in modal dialogs. Finally, I noticed the error, and the prescription for it, in the output from Core Tools. Here you see a composite screenshot showing the error (partially) and the fix. This just means that in addition to learning the nuances of VS Code, the Azure Functions extension and the VS Code PowerShell extension, you also have to learn how to use the Core Tools commands.

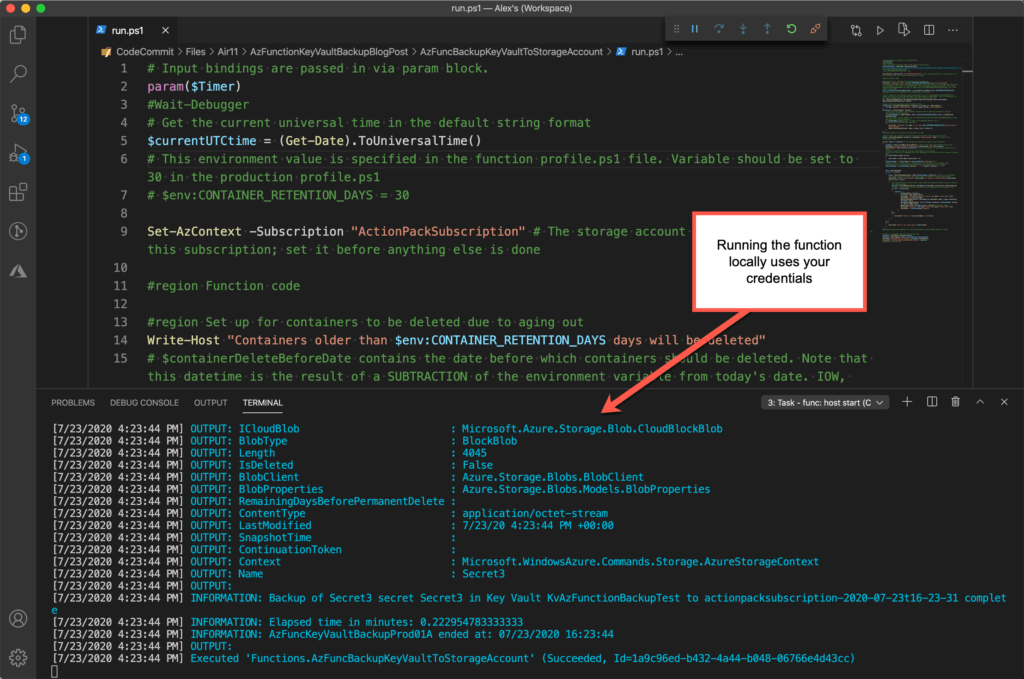

You should also be aware that when you run a function locally using Core Tools, your own credentials, not the managed identity’s, are used. So a function that works locally can still fail once deployed if you haven’t granted the managed identity the required RBAC roles and key vault access.
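The generated profile.ps1 only calls `Connect-AzAccount -Identity` when `$env:MSI_SECRET` is set, which the template treats as the sign that it is running in App Service with a managed identity. On your workstation that variable is normally absent, so the Az context is whatever account you signed in with. A tiny sketch that mirrors the profile’s test makes the difference visible in the logs; Get-FunctionIdentityMode is a hypothetical name.

```powershell
# Hypothetical helper mirroring the test in the generated profile.ps1:
# MSI_SECRET is present when the managed identity endpoint is available (i.e. in Azure).
function Get-FunctionIdentityMode {
    if ($env:MSI_SECRET) { 'ManagedIdentity' } else { 'DeveloperAccount' }
}

# Usage at the top of run.ps1, logging the mode and the signed-in account:
# Write-Host "Identity mode: $(Get-FunctionIdentityMode); account: $((Get-AzContext).Account)"
```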

Finally, after all that, you’ve made it to the code! There are three files here: profile.ps1, function.json and run.ps1. profile.ps1 sets an environment variable, CONTAINER_RETENTION_DAYS, that determines how many days a backup is kept; otherwise it’s identical to the generated version. function.json holds the NCRONTAB schedule `0 0 5 * * *`, which triggers the Azure Function daily at 05:00 UTC (midnight US Eastern Standard Time); change the hour field to 0 if you want midnight UTC. And run.ps1 is the actual Azure Function in PowerShell. I hope these are useful for you and help you explore the world of PowerShell and Azure Functions.
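The retention rule boils down to one comparison: a backup container is stale when its last-modified time is earlier than “now minus CONTAINER_RETENTION_DAYS”. Comparing the two instants directly, rather than the whole-day count of a TimeSpan, avoids truncation surprises near the boundary. A minimal sketch; Test-BackupContainerExpired is a hypothetical name, and the injectable `-NowUtc` parameter exists only to make it testable.

```powershell
# Hypothetical helper: is a backup container past the retention window?
function Test-BackupContainerExpired {
    param(
        [Parameter(Mandatory)] [datetimeoffset] $LastModified,  # e.g. $container.LastModified
        [Parameter(Mandatory)] [int] $RetentionDays,            # e.g. [int]$env:CONTAINER_RETENTION_DAYS
        [datetime] $NowUtc = [datetime]::UtcNow                 # injectable for testing
    )
    # Cutoff: anything last modified before this UTC instant is stale
    $cutoff = $NowUtc.AddDays(-$RetentionDays)
    return $LastModified.UtcDateTime -lt $cutoff
}
```

With a 30-day window evaluated on 2021-03-01 UTC, the cutoff is 2021-01-30, so a container last modified on 2021-01-15 is deleted and one from 2021-02-20 is kept.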

profile.ps1

```powershell
# Azure Functions profile.ps1
#
# This profile.ps1 will get executed every "cold start" of your Function App.
# "cold start" occurs when:
#
# * A Function App starts up for the very first time
# * A Function App starts up after being de-allocated due to inactivity
#
# You can define helper functions, run commands, or specify environment variables
# NOTE: any variables defined that are not environment variables will get reset after the first execution

# Authenticate with Azure PowerShell using MSI.
# Remove this if you are not planning on using MSI or Azure PowerShell.
if ($env:MSI_SECRET -and (Get-Module -ListAvailable Az.Accounts)) {
    Connect-AzAccount -Identity
}

# Uncomment the next line to enable legacy AzureRm alias in Azure PowerShell.
# Enable-AzureRmAlias

# You can also define functions or aliases that can be referenced in any of your PowerShell functions.

# Set the default number of days after which the function will delete backup containers
$env:CONTAINER_RETENTION_DAYS = 30
```

function.json

```json
{
  "bindings": [
    {
      "name": "Timer",
      "type": "timerTrigger",
      "direction": "in",
      "schedule": "0 0 5 * * *"
    }
  ]
}
```
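An NCRONTAB expression has six space-separated fields, starting with seconds, which makes it easy to misread as classic five-field cron. Labelling the fields of the schedule above shows that the third field (5) is the hour: the trigger fires at 05:00:00 every day, in UTC unless the app sets WEBSITE_TIME_ZONE. A throwaway sketch for reading such expressions; the helper name is hypothetical, and it only labels fields without validating them.

```powershell
# Hypothetical helper: label the six fields of an NCRONTAB expression
# ({second} {minute} {hour} {day} {month} {day-of-week}). No range validation.
function ConvertFrom-NcrontabExpression {
    param([Parameter(Mandatory)] [string] $Expression)
    $fields = $Expression.Trim() -split '\s+'
    if ($fields.Count -ne 6) { throw "Expected 6 fields, got $($fields.Count): '$Expression'" }
    [ordered]@{
        Second    = $fields[0]
        Minute    = $fields[1]
        Hour      = $fields[2]
        Day       = $fields[3]
        Month     = $fields[4]
        DayOfWeek = $fields[5]
    }
}
```

For example, `ConvertFrom-NcrontabExpression '0 0 5 * * *'` reports Hour = 5, while a five-field cron string such as `0 5 * * *` is rejected.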

run.ps1

```powershell
# Input bindings are passed in via param block.
param($Timer)

#Wait-Debugger

# Capture the start time of this run as a UTC DateTime
$currentUTCtime = (Get-Date).ToUniversalTime()

# CONTAINER_RETENTION_DAYS is set in profile.ps1 (30 in production). Refuse to run without it:
# an unset value would make the cutoff "now" and delete every backup container.
if (-not $env:CONTAINER_RETENTION_DAYS) { throw "CONTAINER_RETENTION_DAYS is not set; see profile.ps1" }
$retentionDays = [int]$env:CONTAINER_RETENTION_DAYS

# The storage account we're working with is in this subscription; set it before anything else is done
Set-AzContext -Subscription "YourAzureSubscriptionName"

#region Function code

#region Set up for containers to be deleted due to aging out
Write-Host "Containers older than $retentionDays days will be deleted"
# Containers last modified before this UTC instant are deleted: today minus $retentionDays days, i.e. that many days AGO.
[datetime]$containerDeleteBeforeDate = $currentUTCtime.AddDays(-$retentionDays)
#endregion Set up for containers to be deleted due to aging out

# Get a context to the storage account holding the list of subscriptions (and their GUIDs) whose KVs are backed up.
# Note that names are HARD CODED - this is intentional.
$c = (Get-AzStorageAccount -StorageAccountName "yourstorageacct" -ResourceGroupName RgAzureKeyVaultBackupFunction).Context

#region Delete old containers and the blobs in them
# Skip the subscription list and the containers Azure Functions uses for its own purposes
$containers = Get-AzStorageContainer -Container * -Context $c | Where-Object {
    ($_.Name -ne 'subscriptions') -and ($_.Name -notlike 'azure-webjobs*')
}

foreach ($container in $containers) {
    # Compare the container's last-modified time (in UTC) directly with the cutoff
    if ($container.LastModified.UtcDateTime -lt $containerDeleteBeforeDate) {
        Write-Host "Container $($container.Name) is more than $retentionDays days old and is being deleted."
        Remove-AzStorageContainer -Name $container.Name -Force -Context $c
    }
}
#endregion Delete old containers and the blobs in them

#region Create new containers for each subscription and KV; backup secrets to blobs
$blobs = Get-AzStorageBlob -Context $c -Container "subscriptions" -Blob "*" # Get all the subscription blobs

foreach ($blob in $blobs) { # Loop through each subscription blob in the collection
    # The blob's content is the subscription GUID; trim any trailing newline from the uploaded file
    $subGuid = $blob.ICloudBlob.DownloadText().Trim()
    Write-Host "Setting context to subscription $($blob.Name) with GUID $subGuid"
    Set-AzContext -SubscriptionId $subGuid # Set the Az context to the subscription named by $blob

    # Build the container name for this backup: <label>-<sortable UTC timestamp>.
    # Container names allow only lowercase letters, digits and single hyphens, 3-63 characters.
    # The "s" timestamp is 19 characters plus one separator hyphen, leaving 43 characters for the label.
    $label = ($blob.Name.ToLowerInvariant() -creplace '[^a-z0-9-]', '-' -creplace '-{2,}', '-').Trim('-')
    if ($label.Length -gt 43) {
        $label = $label.Substring(0, 43).TrimEnd('-')
    }
    $stamp = $currentUTCtime.ToString("s").ToLowerInvariant().Replace(":", "-")
    $containerName = "$label-$stamp"

    $kvs = Get-AzKeyVault
    if ($null -ne $kvs) {
        # Create a new dated container to hold all the secrets from all the KVs to which we have access
        $stgc = New-AzStorageContainer -Name $containerName -Context $c
        Write-Host "Created new container $($stgc.Name) for secrets in subscription $($blob.Name)"

        foreach ($kv in $kvs) {
            # List the secrets in this KV that are accessible to the function's managed identity
            $secrets = Get-AzKeyVaultSecret -VaultName $kv.VaultName -ErrorAction SilentlyContinue
            if ($null -ne $secrets) {
                foreach ($secret in $secrets) {
                    $tempFile = New-TemporaryFile
                    Write-Host "Attempting backup of secret $($secret.Name) in Key Vault $($kv.VaultName) to $containerName"
                    Backup-AzKeyVaultSecret -VaultName $kv.VaultName -Name $secret.Name -OutputFile $tempFile.FullName -Force
                    # The blob is named after the secret; because of -Force, a same-named secret
                    # from another KV in this subscription overwrites it in this container
                    Set-AzStorageBlobContent -File $tempFile.FullName -Container $containerName -Context $c -Blob $secret.Name -Force
                    Remove-Item -Path $tempFile.FullName -Force # Delete the local copy of the encrypted backup
                    Write-Host "Backup of secret $($secret.Name) in Key Vault $($kv.VaultName) to $containerName complete"
                }
            }
            else {
                Write-Host "No accessible secrets in $($kv.VaultName) (access is forbidden or the vault is empty)"
            }
        }
    }
    else {
        Write-Host "No key vaults found in subscription $($blob.Name)"
    }
}
#endregion Create new containers for each subscription and KV; backup secrets to blobs

# Write an information log with the elapsed and end times.
$endTime = (Get-Date).ToUniversalTime()
$elapsed = New-TimeSpan -Start $currentUTCtime -End $endTime
Write-Host "Elapsed time in minutes: $($elapsed.TotalMinutes)"
Write-Host "Azure key vault secret backup ended at: $endTime"

#endregion Function code
```
